Today’s mission was to deliver a good solution for using Microsoft EventHub with our applications. But before I go further, why do we use EventHub?

At work, we have several web applications that need to pass data to each other. The two we already have are still tightly coupled, so a change to one application affects the other. Now that many more apps may need to share data while staying loosely coupled, the solution was to use Microsoft EventHub:

> Event Hubs is a fully managed, real-time data ingestion service that’s simple, trusted, and scalable. Stream millions of events per second from any source to build dynamic data pipelines and immediately respond to business challenges. Keep processing data during emergencies using the geo-disaster recovery and geo-replication features.
>
> https://azure.microsoft.com/en-us/services/event-hubs/

Essentially, we are using EventHub as the central place where producer applications send data and consumer applications consume it.

Today’s Task:

One of our producer applications is built in .NET Core. We wanted it to send events to EventHub so other applications can consume them. However, we want this to be efficient and not slow the entire application down (at least not too much). We also want to see events in EventHub relatively fast. Another requirement was to make sure we don’t lose events when Azure instances restart or get terminated.

Solutioning

1. Latency

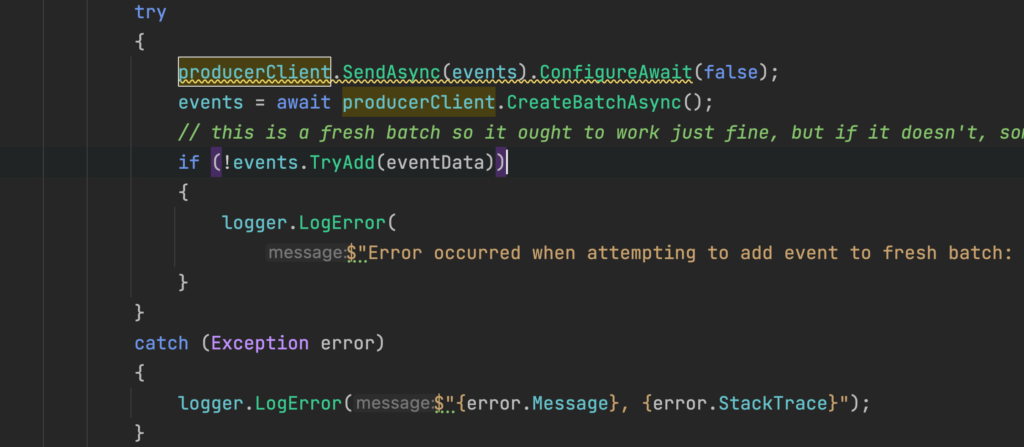

Our first requirement, “efficient and keep latency low”, meant something specific to me. A request that comes to this application and causes events to be generated doesn’t need to wait for those events to be sent to EventHub. Essentially, this means processing events asynchronously and returning the response to the user while the events are still being streamed. Example of the code:

As you can see in the picture, the yellow underline is a warning: since we are not awaiting the task, execution will continue while the task is still running, so we should consider awaiting it.

But that is the thing: we do not want to await, because we don’t care whether the task finishes now or 5 minutes later, and we don’t want to slow requests down waiting for events to be sent. Realistically, it’s a few milliseconds. It could be slower if we were sending events over the public network, but all of this is happening inside Azure (see https://docs.microsoft.com/en-us/azure/active-directory/managed-identities-azure-resources/overview for more).
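To make the fire-and-forget idea concrete, here is a minimal sketch. It is not the code from the screenshot: `SendEventsAsync` and `HandleRequest` are hypothetical names, and the send is simulated with a delay so the example runs without Azure.

```csharp
using System;
using System.Threading.Tasks;

public static class FireAndForgetDemo
{
    // Hypothetical stand-in for the real call that sends events to EventHub.
    public static async Task SendEventsAsync(string payload)
    {
        await Task.Delay(50); // simulate network latency
        Console.WriteLine($"sent: {payload}");
    }

    // Handles a request without waiting for the send to finish.
    public static string HandleRequest(string payload)
    {
        // Assigning to the discard makes the missing await explicit; inside an
        // async method this is also what silences the "not awaited" warning.
        // The continuation only runs on failure, so errors are logged, not lost.
        _ = SendEventsAsync(payload).ContinueWith(
            t => Console.Error.WriteLine($"send failed: {t.Exception?.GetBaseException().Message}"),
            TaskContinuationOptions.OnlyOnFaulted);

        return "200 OK"; // returned while the send is still in flight
    }
}
```

The trade-off is that the caller never learns whether the send succeeded, so logging failures in the continuation is about the only feedback you get.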

1.1 Singleton or Transient?

I created a class, EventHubUtility.cs, responsible for sending events to EventHub. The class is also responsible for batching, and for retries in case we get a failure. Initially, I added the EventHubUtility class to Dependency Injection as Transient. This meant that every request would have its own copy of EventHubUtility, process its events, then return a response.

But when I tested this out, something weird happened. Since we are not awaiting the task that sends events, as soon as the request returns, the objects created for it are cleaned up (by garbage collection or, more likely, by the container disposing of them at the end of the request). Some objects would be torn down while events were still being sent, or the connection would be closed with an error like this: amqp connection is closed

Because of that issue, we had to either await the task before returning the response, or... (drum roll)... make the whole thing a Singleton. This was genius, because:

- We don’t need to wait for Task to send events before we return response.

- We don’t need to recreate EventHubUtility on each request, which saves some time because we reuse the singleton.

- We can batch events across different requests, so we make fewer calls to EventHub!
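The difference between the two lifetimes can be illustrated without any DI container. In this sketch, `EventBuffer` is a hypothetical, stripped-down stand-in for EventHubUtility that only counts what it holds.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified stand-in for EventHubUtility: it only buffers events in memory.
public sealed class EventBuffer
{
    private readonly List<string> _pending = new List<string>();
    private readonly object _gate = new object();

    // Adds one event and returns how many events are now waiting.
    public int Add(string evt)
    {
        lock (_gate)
        {
            _pending.Add(evt);
            return _pending.Count;
        }
    }
}

public static class LifetimeDemo
{
    // Transient-style: each request builds its own buffer, so a batch never spans requests.
    public static int PendingAfterTransientRequests(int requests)
    {
        int last = 0;
        for (int i = 0; i < requests; i++)
        {
            var perRequest = new EventBuffer();
            last = perRequest.Add($"event-{i}");
        }
        return last; // 1 for any positive number of requests
    }

    // Singleton-style: every request shares one buffer, so events accumulate toward a batch.
    public static int PendingAfterSingletonRequests(int requests)
    {
        var shared = new EventBuffer();
        int last = 0;
        for (int i = 0; i < requests; i++)
        {
            last = shared.Add($"event-{i}");
        }
        return last; // equals the number of requests
    }
}
```

In ASP.NET Core, the two cases correspond to registering the class with `services.AddTransient<EventHubUtility>()` or `services.AddSingleton<EventHubUtility>()`.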

2. Reliability

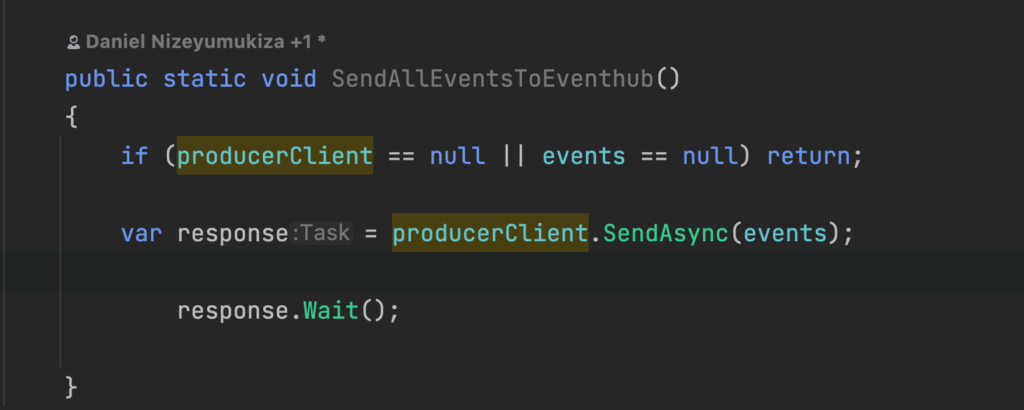

The second requirement was to keep the system reliable. We really don’t want to lose data when instances restart or get terminated. In .NET terms, it meant using what I will call a finalizer.

A finalizer, in this post, is a function that gets called when the application is about to be terminated, for instance when the machine is rebooting in Azure. (It is a shutdown hook, not a C# class finalizer, which is run by the garbage collector.)

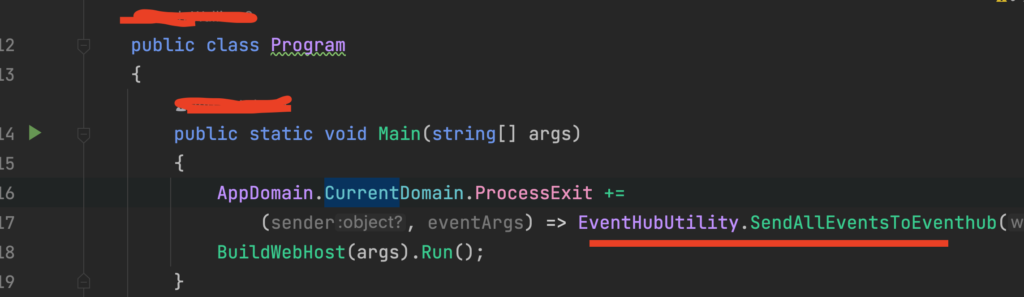

We are not responsible for calling this function ourselves; the Azure instance that is about to die should call it so that all remaining events get sent. To achieve that, we first define the function, then register it in Startup.cs so that it gets called on shutdown.

The function was something simple like this:

We await this one, because we don’t want to let the machine shut down while we are still sending data.

And we register it in Startup.cs:

However, the thing with finalizers is that they do get called when the application is shutting down gracefully, but there is no guarantee the machine will wait for the application to finish. Therefore, the solution above is not enough to guarantee minimal data loss when a machine restarts.

In addition, if the machine is forced to shut down (like when you hold the power button), we lose any events that weren’t yet sent to EventHub.
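The shutdown hook described above could look roughly like the sketch below. It is an assumption, not the code from the screenshots: `SendRemainingEventsAsync` is a hypothetical name, and a plain `CancellationToken` stands in for the host's stopping signal. In ASP.NET Core that token would typically be `IHostApplicationLifetime.ApplicationStopping`.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class ShutdownFlushDemo
{
    // Number of events flushed at shutdown (kept only so the demo can be observed).
    public static int Flushed;

    // Hypothetical stand-in for EventHubUtility's "send everything that is left" function.
    public static async Task SendRemainingEventsAsync(int pending)
    {
        await Task.Delay(20); // simulate the network call
        Flushed = pending;
    }

    // Registers the flush on a token that fires when the application starts stopping.
    public static void RegisterShutdownFlush(CancellationToken stopping, int pending)
    {
        // Register takes a synchronous callback, so we block until the send completes;
        // otherwise shutdown could proceed while events are still in flight.
        stopping.Register(() => SendRemainingEventsAsync(pending).GetAwaiter().GetResult());
    }
}
```

Blocking inside the callback is what gives the send a chance to finish, but it only helps on a graceful shutdown. A forced power-off never fires the token.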

2.1 Reliability++

The issue above can’t be easily solved. We can minimize the amount of data we might lose by making the batch smaller, so it fills up more often, or by sending events more often, even before the batch is full.

I decided to go with the latter!

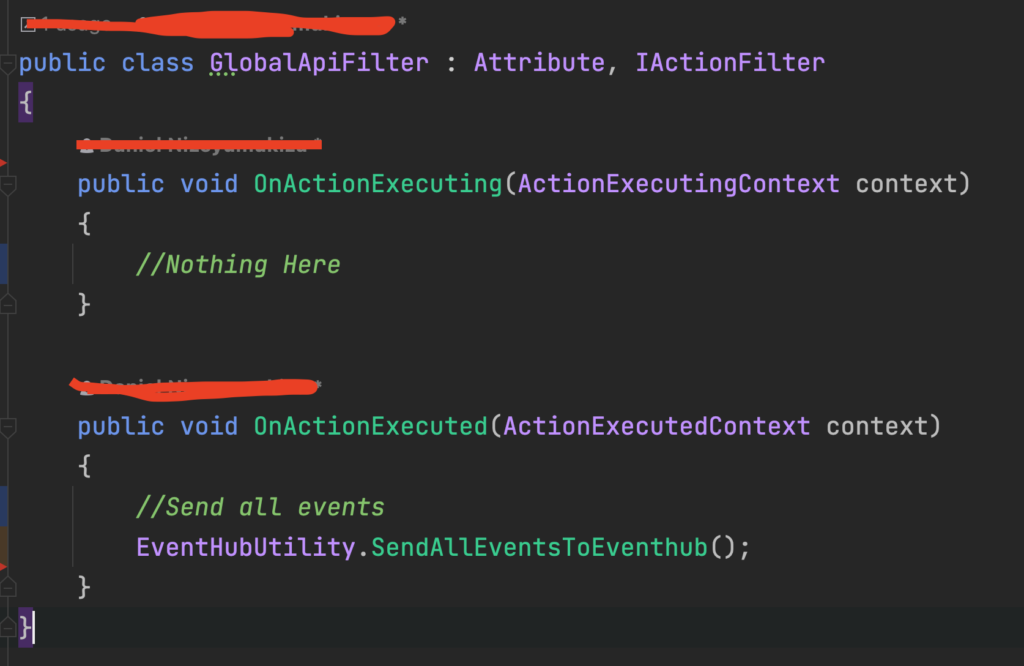

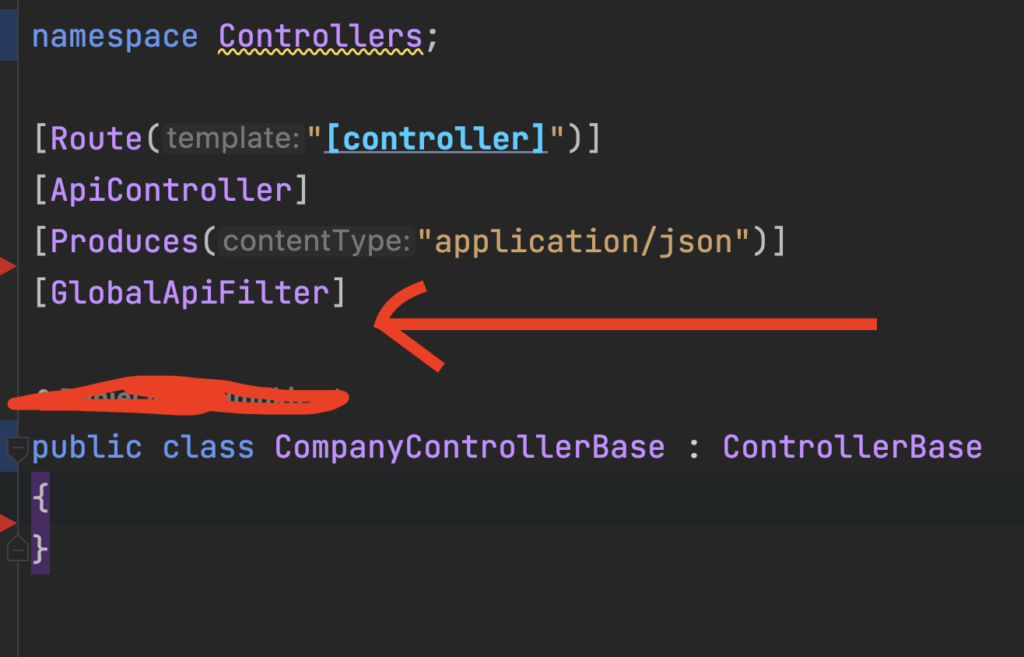

In .NET Core, you can have an attribute that executes every time a given action or controller runs. (More on Action Filters: https://docs.microsoft.com/en-us/aspnet/mvc/overview/older-versions-1/controllers-and-routing/understanding-action-filters-cs)

I created an action filter on a base controller and had all controllers extend it. The intent was that every time a response is about to be sent back to the caller, we tell EventHubUtility to send whatever events it has.

This means that if we have, let’s say, 30 events in the queue, and the queue is waiting for 100 events, then we send those 30 events when the response returns. However, if 340 events get processed across the requests, then by the time a request returns we would hopefully have only 40 events left in the queue (batches were sent 100 at a time, so 40 remain), and we call the function to send all remaining events before we return the response.
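The numbers above can be checked with a small sketch of a batching queue. `EventBatcher` is a hypothetical stand-in for EventHubUtility, and the actual send is passed in as a delegate so the example runs without Azure.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified stand-in for EventHubUtility.
public sealed class EventBatcher
{
    private readonly int _batchSize;
    private readonly Action<IReadOnlyList<string>> _send; // hypothetical send callback
    private readonly List<string> _pending = new List<string>();
    private readonly object _gate = new object();

    public EventBatcher(int batchSize, Action<IReadOnlyList<string>> send)
    {
        _batchSize = batchSize;
        _send = send;
    }

    // Queues one event and sends a batch as soon as the queue is full.
    public void Enqueue(string evt)
    {
        List<string> full = null;
        lock (_gate)
        {
            _pending.Add(evt);
            if (_pending.Count >= _batchSize)
            {
                full = new List<string>(_pending);
                _pending.Clear();
            }
        }
        if (full != null) _send(full);
    }

    // Sends whatever is queued, even if the batch is not full.
    // This is what the action filter would call as a response goes out.
    public void Flush()
    {
        List<string> rest;
        lock (_gate)
        {
            if (_pending.Count == 0) return;
            rest = new List<string>(_pending);
            _pending.Clear();
        }
        _send(rest);
    }
}
```

With a batch size of 100, enqueueing 340 events triggers three sends of 100 each, and a following `Flush()` sends the remaining 40.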

At this point we are golden, right? Every request tells EventHubUtility to send all the events it has in the queue. If the batch is not full, there is no reason to sit on it, because if the instance failed we would lose the events sitting in the queue.

This solution felt good enough: we batch events instead of sending each one by itself, and instead of letting events sit in the queue, we send all remaining events as each request returns a response.

Production Down

You know one of an engineer’s nightmares? Having Production go down because of code you deployed! To make matters worse, if you didn’t put everything behind a feature flag, then you are toast.

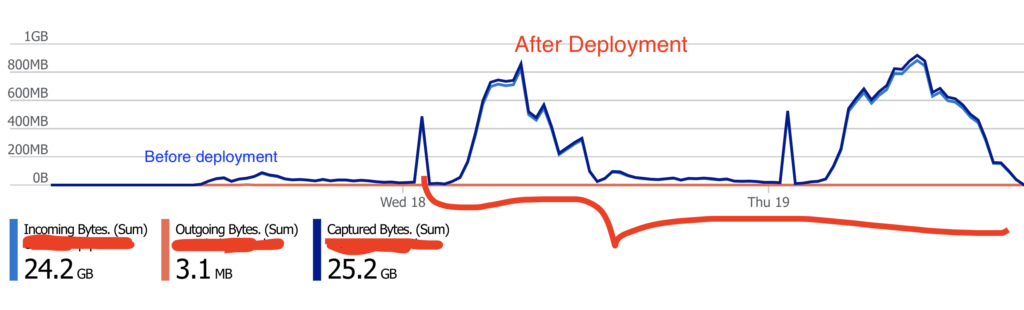

After deploying the awesome design I described above, I was shocked to see the results. This is the chart showing the events as they arrive in EventHub:

There is nothing wrong with having thousands of events per minute, as long as the events are legit. With my awesome release, we were averaging 20K events per minute, all from fewer than 200 users on site per minute. Obviously, there is no way each user was generating 100 events per minute (given that we only emit a few events, depending on what a user does on site).

What happened? As requests come in, they all tell EventHubUtility to send events (remember that it is a Singleton with 1 queue), so EventHubUtility sends the same queue multiple times. Here you have something like 200 threads (200 users with their requests), all telling EventHubUtility (the singleton) to submit whatever it has in the queue right away. To solve this, we would probably need to fix concurrency (More on that: https://docs.microsoft.com/en-us/ef/core/saving/concurrency). Essentially, you need to let EventHubUtility send the events once, and until it is done, no other request can trigger another send.

The other alternative would be making EventHubUtility Transient instead of Singleton (More on that: https://docs.microsoft.com/en-us/dotnet/core/extensions/dependency-injection-usage).

With Transient, each request gets its own copy of EventHubUtility, with its own queue. Each request sends its own events. We need to be careful to actually await the task that sends events; otherwise cleanup starts as soon as a response is returned, which causes the error we saw above.
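One way to express "send once, and let nobody else trigger a send until it is done" is a non-blocking gate around the flush. This is only a sketch of that idea, not the fix that was deployed: `SingleFlightFlusher` is a hypothetical name, and the flush itself is passed in as a delegate.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public sealed class SingleFlightFlusher
{
    private readonly SemaphoreSlim _gate = new SemaphoreSlim(1, 1);
    private readonly Func<Task> _flush; // hypothetical: sends whatever is queued

    public SingleFlightFlusher(Func<Task> flush)
    {
        _flush = flush;
    }

    // Returns true if this call performed the flush,
    // false if another flush was already running and this call was skipped.
    public async Task<bool> TryFlushAsync()
    {
        // A zero timeout means "do not wait": if the gate is taken, skip instead of queueing up.
        if (!await _gate.WaitAsync(0)) return false;
        try
        {
            await _flush();
            return true;
        }
        finally
        {
            _gate.Release();
        }
    }
}
```

Requests that arrive while a flush is in progress simply skip it; their events stay in the queue and go out with the next full batch or the next flush.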

Feature Flags Save the Day … Kinda

Two things had to be fixed, and fixed yesterday: 1. Hide everything behind a feature flag. 2. Prevent controllers from making calls to EventHub on every request. To be safe, we also had to add a flag to turn off events entirely. I used LaunchDarkly to hide those behaviors. If you need to see how I implemented that in .NET Core, please leave a comment. With the fixes above, I am hoping duplicate events do not happen again (at least not as much, because we are batching events and sending them when the batch is full)!

I deployed everything. Tune in later to learn what happens. Fingers crossed!
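The kill switch can be sketched independently of any flag provider. This does not use the LaunchDarkly SDK: the flag lookup is a hypothetical `Func<bool>` that a real flag client would sit behind, and `GuardedEventSender` is an illustrative name.

```csharp
using System;

public sealed class GuardedEventSender
{
    private readonly Func<bool> _eventsEnabled; // hypothetical flag lookup, evaluated on every call
    private readonly Action<string> _send;      // hypothetical send to EventHub

    public GuardedEventSender(Func<bool> eventsEnabled, Action<string> send)
    {
        _eventsEnabled = eventsEnabled;
        _send = send;
    }

    // Returns true if the event was handed to the sender, false if the flag turned it off.
    public bool TrySend(string evt)
    {
        // Kill switch: when the flag is off, drop the event without touching EventHub.
        if (!_eventsEnabled()) return false;
        _send(evt);
        return true;
    }
}
```

Evaluating the flag on every call, rather than once at startup, is what lets the feature be switched off without a redeploy.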